In December last year P3 Ambika mounted an exhibition of Terry Flaxton’s work as AHRC Creative Research Fellow at Bristol University, which was concerned with high resolution imaging. The works were mostly large scale moving image projections consisting of group portraits and landscapes, precisely crafted cinematographic vignettes, posed and composed with clear attention to detail, captured with high-end, high definition digital cinema technology.

To complement the exhibition Flaxton invited a number of people over a number of lunchtimes to talk around the question of the ‘aura’ of the work of art, famously described by Walter Benjamin in The Work of Art in the Age of Mechanical Reproduction (1936) as being absent from the mechanically reproduced work, and what the further implications of this might be in the age of digital high resolution video reproduction. My response was to return to glitch as an index of digital materiality and speculate what this might mean now that Standard Definition (SD) has given way to High Definition (HD). My position, delivered as a kind of polemical rant, was an appeal to an imperative to glitch-up the medium, a delinquent reaction to the new hegemony of high definition, in short a call to “fuck shit up”.

We are, it seems, past the cusp of a transition: as the critical mass of video media shifts away from SD, HD has become dominant on consumer and professional levels, in the cinema, on television, and in gallery-based artists’ work. Is it possible anymore to purchase a new TV that isn’t HD-ready? Is it possible now to buy a camcorder that isn’t HD? It would seem, anecdotally at least, increasingly not.

While HD moving image media has indeed become commonplace in gallery-based artists’ work, Flaxton’s attempt to highlight the effects of its specificity is rare in this context. Ed Atkins, in a recent essay, discusses HD in such a way that seems on the face of it to accept the promise of its verisimilitude, while critiquing its effect, writing that “High Definition (HD) has surpassed what we tamely imagined to be the zenith of representational affectivity within the moving image, presenting us with lucid, liquid images that are at once both preposterously life-like and utterly dead.” The problem with HD for Atkins is that it is “…a ‘hollow’ representation, eternally distanced from life, from Being.” This is a paradox that rests, according to Atkins, on the ontological contradiction that HD is “essentially immaterial” and that this is “…concomitant to its promise of hyperreality – of previously unimaginable levels of sharpness, lucidity, believability, etc., transcending the material world to present some sort of divine insight. Though of course, HD’s occasion is entirely based upon the fantastic representation of the material and only the material.”

For Atkins the ostensible success of the medium, its realism, its astonishing representational ability, paradoxically renders what it represents as dead, dead in the sense that the very theatricality of what is represented – in his essay the image of Johnny Depp in Michael Mann’s Public Enemies (2009) – is revealed for what it is, and for not being an image of a ‘real’ life, it is an image of death, and as such a second twist of the paradox comes into play, that as an image of death it reveals the mortality of the ‘real’ Johnny Depp.

In identifying these representational phenomena as qualities of HD, Atkins characterises it as existing within the Zeitgeist as it “both apprehends the progress [of the drive towards ever improved realism] and helps it on its way… It’s ambiguous yet minted enough to be understood as both transitory (how high is ‘High’?) and specific (‘Definition’).” However, this perfect and perfectly dead image is in reality the result not of a digitally immaterial medium, but of the very material application of software, codecs and faster processing, as the Zeitgeist reflects the demands of the industrial media complex for ever higher resolutions, working hard to ensure an illusion of immateriality through ever improved realism. This is the reproduction of a photo-realistic vision of the world that has for centuries been locked in by grids, planes and lenses, enshrined in the conventions of Euclidean perspective, as the default condition for representational realism.

Bitmapping, colour, codec, layers, grading, sprites, perspective, projection and vectors: in Making Space (Senses of Cinema, Issue 57) Sean Cubitt demonstrates how developments in compression software work to maintain illusions of spatial movement in high resolution cinema. While he admits that “…we are still trying to understand what it is that we are looking at now…”, it is clear that the way in which whatever it is becomes visible is the result of some highly sophisticated processes and processing. He describes how this is forged through complex matrices of raster grids, bitmap displays and hardwired pixel addresses. As digital images are compressed, crushed, some more than others depending on the delivery platform (from YouTube to Blu-ray and beyond), the illusion of movement relies on a dizzying array of operations of vector prediction, keyframing and tweening in Groups of Blocks. Layers of images, meanwhile, have become key components of digital imaging, creating representational space through the parallax effect, whereby relative speed stands in for relative distance and the fastest layer appears to be closest to the viewer.

So, digital moving images are not simply the product of invisible and vaguely immaterial technology; due to physical limits on storage space and bandwidth in its display, the digital moving image is very much dependent on software and hardware to construct the illusion of high resolution; far from leaving media specificity behind, once the dust of apparent verisimilitude has settled or been stirred up, once the seductive veneer of the image has become commonplace or surpassed by ever higher definition, there may be much for a digital materialist to find in post-media medium specificity.

But digital moving image media’s apparent detachment from a physical base or specific material apparatus has been accelerated with HD. Cameras record directly to drive or card; exhibition is less likely to be through playback from dedicated physical media like tape and optical disc, and more likely to be from a hard drive of some description. Whether this is dedicated moving image equipment or the ubiquitous disk found on a local computer or network, physically and technologically it will be indistinguishable and could equally be used to store and play back sound, display text, image, the internet, a spreadsheet, an eBook, or any given combination of those things and countless others. But media forms have historically been tethered to physical material, and in reality this is no less the case with the migration of media onto digital technology. As N. Katherine Hayles points out in Writing Machines, “materiality is as vibrant as ever, for the computational engines and artificial intelligence that produce simulations require sophisticated bases in the real world”. However, we have seen that in the digital domain materiality no longer demands physical specificity, so it is more productive to conceive of media specificity as having taken something of a metaphysical turn. While they may rely on the same physical support of hard drive machinery, specific digital media can now be best thought of as discrete ‘metaphysical objects’ – as things that we still call ‘films’, ‘photographs’, ‘sounds’, ‘poems’, ‘recipes’ – but objects nonetheless; some we might call invoices, others we will call artworks. How much less of a real object is a virtual cat, chair or banana than its physical equivalent? They all exist in the world as entities with their own essence and ontology. Medium specificity simply distinguishes media objects of a different nature, determining the medium’s essential qualities as an object separate from other objects in the world.

The essence of the medium or format, like the essence of any object, is never fully approached or appreciable; the whole of the object is never apprehended all at once. Traces of its essence, however, are on occasion visible: the grain of the film betrays its photo-chemical nature, the scratch its physical material, etc. Remediation has ensured that the material tropes of physical analogue moving image media forms have become thoroughly subsumed into HD, but as effect rather than as material essence. Essences and questions of materiality can also be applied to electronic and digital media; as the ‘whole’ of the object is never appreciated and, like indexicality and hapticity, is unconstrained by notions of physicality, media can be considered as objects, or mega-objects, with qualities of ontologically equal, or at least non-competing, status as material objects. HD as a medium isn’t some kind of dematerialized digital state of imminence ready to emulate and then better pre-existing analogue media forms; it has its own visual representational qualities made possible by a material base, oriented as a manifested object, as a specific thing in itself.

In the development of his object-oriented philosophy, Graham Harman takes up Heidegger’s formulation of tool-being, starting with the broken tool analogy: a piece of equipment reveals itself as a discrete object once it stops being useful. HD can also be described in this way; its existence as a medium is not noticed until it no longer functions in the invisible mediation of information. Glitch effects break the medium, revealing something of its essence as artifacts, intended or otherwise, of malfunctioning code, compression or hardware. As HD becomes commonplace, weird artifacts with exotic names like macroblocking and mosquito noise are becoming everyday experiences.

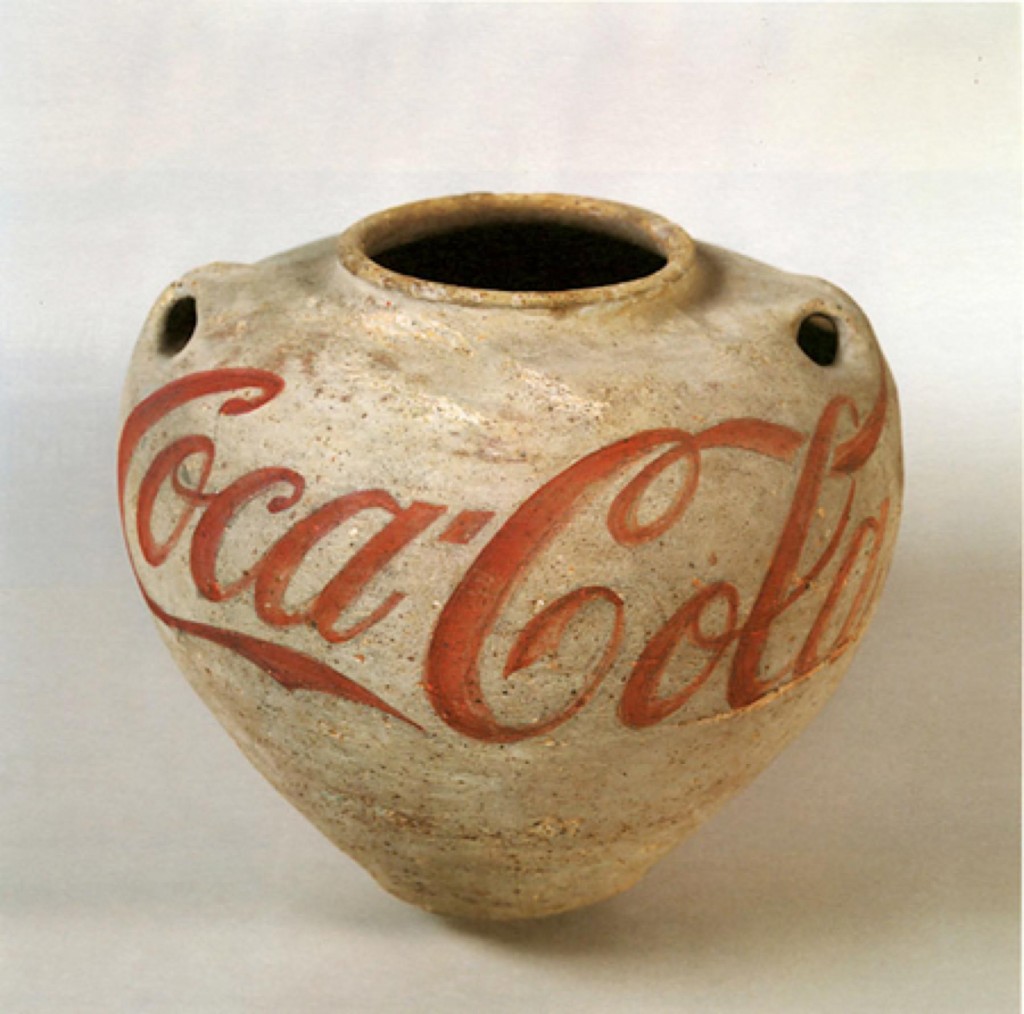

Harman extends tool analysis to all objects, and in this sense the tool isn’t an object that is “used”, it simply ‘is’: “…to refer to an object as a “tool-being” is not to say that it is brutally exploited as means to an end, but only that it is torn apart by the universal duel between the silent execution of an object’s reality and the glittering aura of its tangible surface.” (Graham Harman, ‘Object-Oriented Philosophy’, Towards Speculative Realism, 2009). In returning to Walter Benjamin’s assertion about the dubious status of the auratic in mechanical, electronic and digital reproduction, as investigated in Terry Flaxton’s discussions above, we can propose that in Harman’s terms a digital medium conforms to the conditions of being an object: its visible manifestation has a tangible audiovisual surface, it has aura, but it is also an object which draws attention to itself as such when it is broken. Glitch artifacts, which is to say the broken workings of the code, compression and hardware, attest to its essence and its materiality.

Critical examinations of moving image medium specificity in art and cinema have been predicated on a critique of the use of the media by cultural practitioners, such as Rosalind Krauss in A Voyage on the North Sea: Art in the Age of the Post-Medium Condition (2000) or Noël Carroll in Theorizing the Moving Image (1996), which, while diverging from Greenbergian Modernism, occupy more or less the same predominantly humanist critical ground, where art objects and media are framed solely in relation to human production and reception. However, while human art practice moves away from specificity, inventing a world of relationism, process, and the ongoing project, the medium and the object have not simply ceased to exist.

Graham Harman offers the tantalizing assertion that “…the dualism between tool and broken tool actually has no need of human beings, and would hold perfectly well of a world filled with inanimate entities alone.” Where could this take us as a speculative object-oriented metaphysical materiality, conceiving of a post-medium specificity which attends to the materiality of the media object, an object that has an essence, ontology and contingency at least equal in value and status to that of the artwork, the artist, or any other object in the world?